This week, OpenAI introduced its new state-of-the-art AI model: GPT-4o.

It’s a beast—preliminary tests show it blowing other major models out of the water when it comes to basic and complex prompt requests.

The “o” in GPT-4o stands for omni, as it was designed from the start to support real-time inputs and outputs via audio, text, and visuals or any combo thereof.

This is a step up from GPT-4, which is still powerful but mainly centered on text-based interactions. In contrast, GPT-4o is built to understand and generate text, images, and audio, making it a versatile tool for all sorts of applications.

Additionally, GPT-4o is faster at voice responses, more accurate in non-English languages, and 50% cheaper in the API than GPT-4 Turbo. These improvements make GPT-4o more practical for everyday use cases, with basically endless personal and professional possibilities.

For example, imagine generating a script, visual storyboard, and audio cues for a VR experience all within the same platform. Or creating a metaversal art installation that can respond to visual and audio inputs from viewers through the GPT-4o API.
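For a rough idea of what "through the GPT-4o API" could look like, here is a minimal sketch that only builds the JSON body for a Chat Completions request pairing a text prompt with one image. It covers text and image input only, and it doesn't send anything over the network. The function name `build_vision_request` and the sample prompt are made up for illustration, and the placeholder bytes stand in for a real camera frame.

```python
import base64
import json


def build_vision_request(prompt: str, image_bytes: bytes, mime_type: str = "image/png") -> dict:
    """Build a Chat Completions request body with one text part and one image part.

    Hypothetical helper for illustration; it does not call the API.
    """
    # Images can be passed inline as a base64-encoded data URL.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": "gpt-4o",
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": prompt},
                    {
                        "type": "image_url",
                        "image_url": {"url": f"data:{mime_type};base64,{encoded}"},
                    },
                ],
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder bytes; a real installation would pass an actual image capture.
    body = build_vision_request("Describe what the viewer is doing.", b"placeholder")
    print(json.dumps(body, indent=2))
```

From there, you would POST that body to the Chat Completions endpoint with your API key, or hand the same `messages` list to the official client library.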

Of course, one of the most exciting things about GPT-4o is that, for the first time, a major breakthrough AI model like this is free for anyone to try, with no premium ChatGPT subscription required. As OpenAI noted in their announcement yesterday:

“GPT-4o’s text and image capabilities are starting to roll out today in ChatGPT. We are making GPT-4o available in the free tier, and to Plus users with up to 5x higher message limits. We'll roll out a new version of Voice Mode with GPT-4o in alpha within ChatGPT Plus in the coming weeks.”

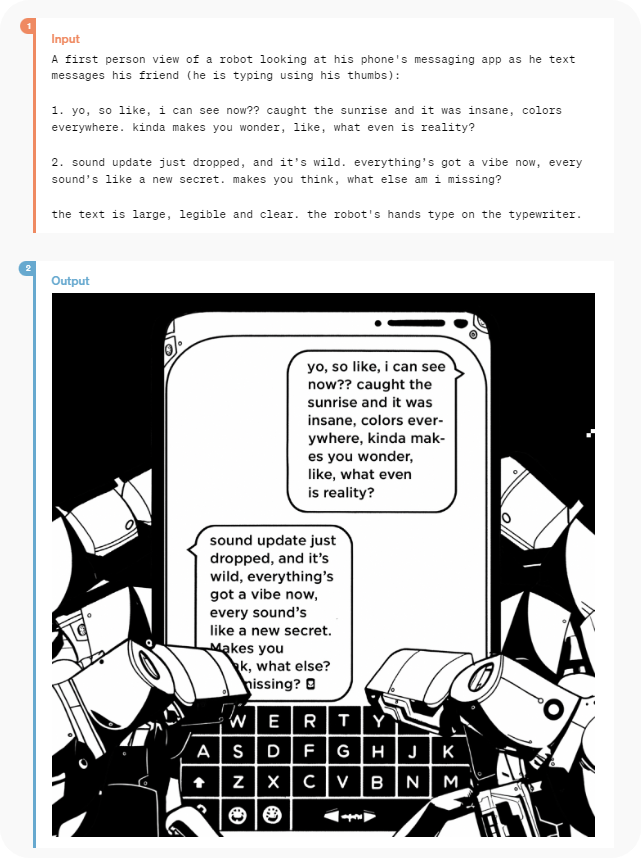

That said, if you’re interested in trying the new GPT-4o model, head over to chatgpt.com, sign in or create an account, and then check and see if it’s already available to you in the “Models” dropdown menu like so:

Remember that the full voice and video capabilities of GPT-4o aren’t rolled out to everyone yet, but these will be coming in the near future.

In the meantime, get to prompting and experimenting as a free or premium user, as you’ll want to see this kind of power for yourself. Also, be sure to check out some of the new demo videos to get a better feel for what this impressive model has to offer going forward: