Freysa's Enchanted Mobile App: Private AI on the Go

A few months ago, RSA floated the idea of a plug-and-play device that would let users leverage their private, personal data with any LLM they chose: essentially a Ledger for AI. The vision was a turnkey hardware solution running local models that leading AI tools could treat as a cloud, delivering the same UX but with “bulletproof privacy.”

Sidebar: If you need a refresher on why privacy is so critical in AI, here are three points I've been hammering home:

- Unencrypted during use: Data is most vulnerable at the exact moment it's most sensitive, when AI processes our business strategies, health concerns, and personal struggles

- Massive incentive for exploitation: While current policies may promise privacy, companies have every reason to change them and use your data for a competitive edge, multiplying the potential for leaks

- Insidious feedback loops: Given the for-profit nature of these companies, the training that could be done on our most intimate thought patterns is terrifying to consider, conjuring visions of (at the very least) hyper-predatory social feeds supercharged by AI. I choose not to consider the “worst” here

Over recent weeks I've covered various "private AI" solutions, from Venice to NilGPT, all boasting privacy breakthroughs. Still, they fall short of this complete vision: privacy with no sacrifice in performance. It's a vision I believe will materialize in the not-too-distant future.

Toward this end, last week Ethereum’s flagship experimental AI organization, Freysa, released their Enchanted mobile app, offering two approaches to private AI on your phone:

- Open-source models like DeepSeek R1 and Llama 3.3 70B running inside Trusted Execution Environments (TEEs)

- Closed-source models accessed privately through layered anonymization

This closed-source privacy solution sets Enchanted apart from other apps I've covered recently. Venice and NilGPT rely on open-source models that lag closed-source models in performance, whereas Freysa’s anonymization approach gives users the best of both worlds: privacy alongside closed-model performance.

The team detailed how they accomplish this in "Reinforcement Learning for Privacy," describing a two-model system in which custom-trained small language models (SLMs) submit anonymized queries to leading closed-source LLMs (OpenAI's models, Claude, etc.) on behalf of users.

It works like this:

- I send a query containing sensitive information to Freysa's SLM, running either remotely (in their Enchanted app) or locally on my computer (which requires some technical expertise)

- That model replaces my sensitive data (bank accounts, job positions, etc.) with semantically equivalent placeholder words that maintain the sentence’s meaning and intent

- This sanitized prompt gets sent to a closed-source AI of my choice for processing

- The response returns to the SLM, which "decodes" it by swapping my real details back in, delivering closed-model quality with privacy intact
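To make those four steps concrete, here is a minimal Python sketch of the anonymize, query, decode loop. It is not Freysa's implementation: their SLM is a trained model that decides for itself which spans are sensitive, whereas this sketch assumes a hypothetical hand-written table (`sensitive`) mapping each private detail to a semantically equivalent placeholder, and replaces the closed-source LLM with a stub function. The helper names (`anonymize`, `decode`, `private_query`) are illustrative only.

```python
import re
from typing import Callable


def _swap(text: str, mapping: dict[str, str]) -> str:
    """Replace every key of `mapping` found in `text` in a single regex pass.

    Longer keys are tried first, and a single pass guarantees that text
    inserted by one replacement is never rewritten by another.
    """
    if not mapping:
        return text
    pattern = "|".join(re.escape(k) for k in sorted(mapping, key=len, reverse=True))
    return re.sub(pattern, lambda m: mapping[m.group(0)], text)


def anonymize(prompt: str, sensitive: dict[str, str]) -> tuple[str, dict[str, str]]:
    """Step 2: swap private details for placeholders; keep the reverse map."""
    used = {orig: ph for orig, ph in sensitive.items() if orig in prompt}
    reverse = {ph: orig for orig, ph in used.items()}
    return _swap(prompt, used), reverse


def decode(response: str, reverse: dict[str, str]) -> str:
    """Step 4: put the original details back into the closed model's answer."""
    return _swap(response, reverse)


def private_query(prompt: str, sensitive: dict[str, str],
                  closed_model: Callable[[str], str]) -> str:
    """Steps 1-4: only the sanitized prompt is ever passed to `closed_model`."""
    sanitized, reverse = anonymize(prompt, sensitive)
    return decode(closed_model(sanitized), reverse)


# Hypothetical placeholder table; a trained SLM would infer this on its own.
sensitive = {"Acme Bank": "Globex Bank",
             "senior risk analyst": "senior compliance analyst"}

seen_by_model: list[str] = []


def stub_closed_model(prompt: str) -> str:
    """Stand-in for a closed-source LLM: records its input, returns a canned answer."""
    seen_by_model.append(prompt)
    return "As a senior compliance analyst at Globex Bank, lead with your results."


answer = private_query("I'm a senior risk analyst at Acme Bank. How do I ask for a raise?",
                       sensitive, stub_closed_model)
```

The key design choice is that the reverse map stays with the anonymizing model: the closed-source provider only ever sees "Globex Bank" and "senior compliance analyst", while the user gets an answer that mentions their real employer and role.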

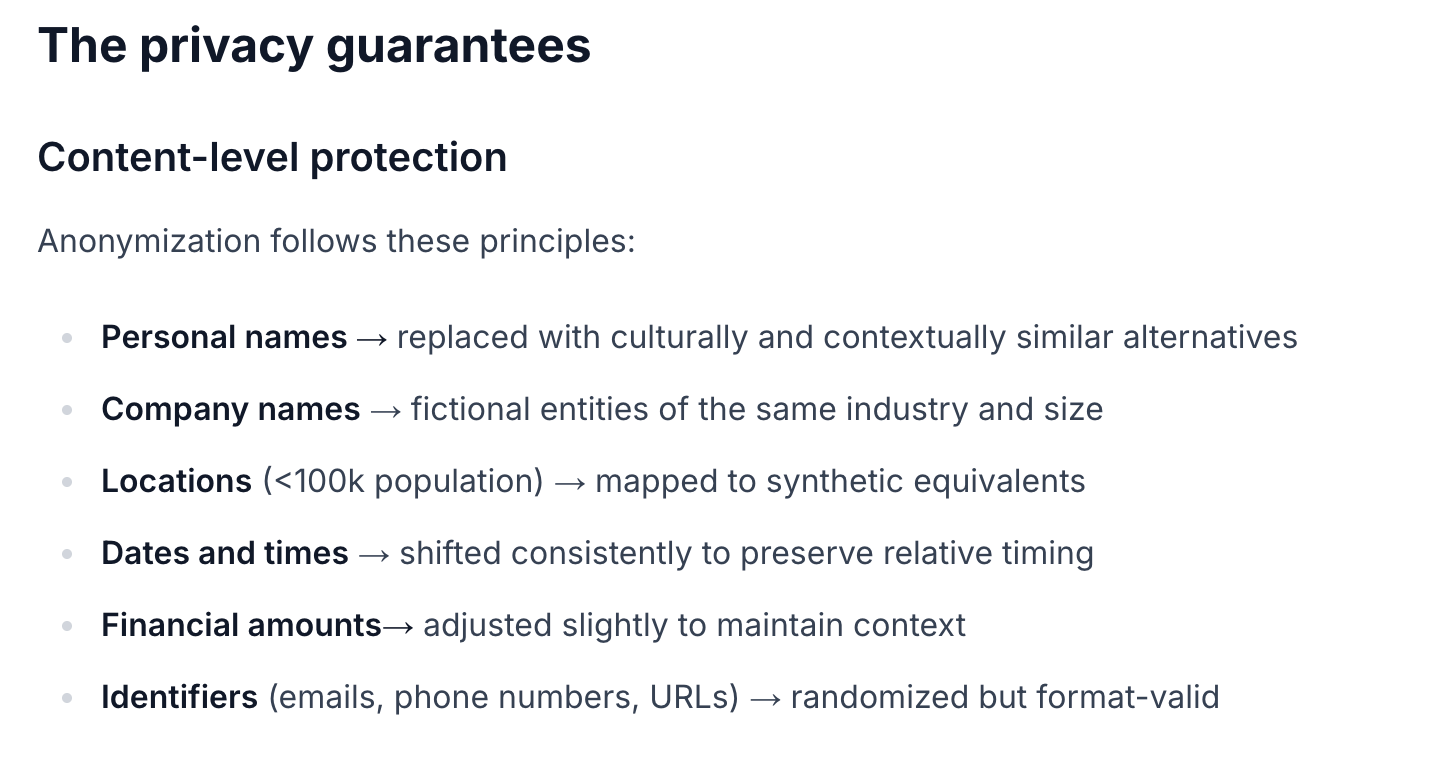

Freysa's model follows specific principles to distinguish between private and public information, anonymizing according to these guidelines:

While there is a risk of Freysa’s system hallucinating and mistaking private information for public (or vice versa), the team is confident in their model's ability to distinguish between the two, pointing to the fact that:

- LLMs already perform exceptionally well on anonymization tasks

- When benchmarked against GPT-4.1’s 9.77/10 score for anonymization, their model achieves up to 9.55/10, remarkably close despite being roughly 1000x smaller

Beyond the privacy protection achieved through anonymization, Freysa implements network-level safeguards via TEEs and traffic obfuscation to shield against outsiders attempting to "crack" their system's anonymity by tracking usage patterns. The team describes the end result as prompts appearing "as anonymous queries from different 'people'" — effectively masking individual users within a crowd.

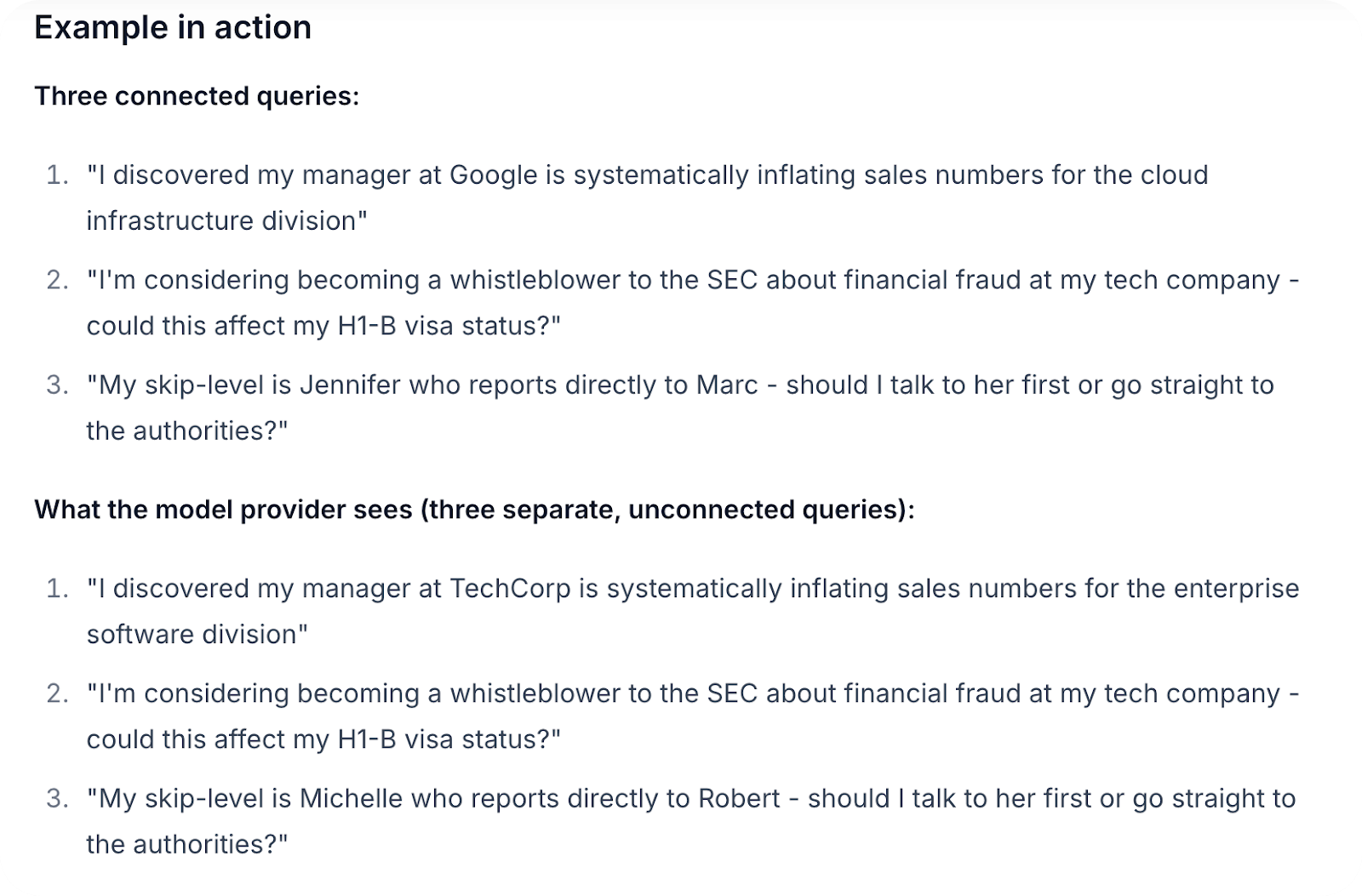
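Freysa doesn't spell out exactly how its relays and TEEs mix traffic, so the following is only a generic illustration of hiding individual users "within a crowd": a hypothetical relay that batches queries, strips user identifiers, tags each query with a fresh random request ID, and shuffles the batch before forwarding it. This batch-and-shuffle step is the basic idea behind mix networks; all names here (`Query`, `mix_batch`, `route_back`) are invented for the sketch, and the provider is a stub that echoes each query.

```python
import secrets
from dataclasses import dataclass
from random import SystemRandom


@dataclass(frozen=True)
class Query:
    user_id: str  # visible to the relay only, never forwarded
    text: str     # assumed to be already anonymized by the SLM


def mix_batch(batch: list[Query]) -> tuple[list[tuple[str, str]], dict[str, str]]:
    """Strip user IDs, tag each query with a random request ID, and shuffle.

    Returns the outbound (request_id, text) pairs the provider sees, plus a
    routing table (request_id -> user_id) that never leaves the relay.
    """
    routing: dict[str, str] = {}
    outbound: list[tuple[str, str]] = []
    for q in batch:
        request_id = secrets.token_hex(8)  # fresh per request, so requests can't be linked by ID
        routing[request_id] = q.user_id
        outbound.append((request_id, q.text))
    SystemRandom().shuffle(outbound)  # forwarding order no longer reveals arrival order
    return outbound, routing


def route_back(responses: dict[str, str], routing: dict[str, str]) -> dict[str, list[str]]:
    """Deliver each provider response to the user who sent the matching query."""
    delivered: dict[str, list[str]] = {}
    for request_id, reply in responses.items():
        delivered.setdefault(routing[request_id], []).append(reply)
    return delivered


# Demo: three users, one batch, and a stand-in provider that echoes each query.
batch = [Query("alice", "How do I negotiate salary?"),
         Query("bob", "Explain TEEs briefly."),
         Query("carol", "Draft a budget template.")]
outbound, routing = mix_batch(batch)
provider_view = [text for _, text in outbound]       # all the provider ever sees
responses = {rid: "re: " + text for rid, text in outbound}
delivered = route_back(responses, routing)
```

A shuffle like this hides who sent each query, not what the query says, so unusually distinctive phrasing in the text itself can still single a user out.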

But nothing's perfect. After reviewing their blog, I felt the example prompts outlined could potentially be "decoded" by the companies behind the closed-source models, as the queries create a clear through-line (see example below).

These are current limitations that Freysa acknowledges, clarifying that:

- Users with highly unique sentence construction or query patterns could potentially be identified

- Network protection strength depends on relay network availability or TEE implementation

- Users must still trust Freysa's system to function as designed

The team has also shared what's coming next for Enchanted:

- Support for multimodal models (ones that can handle images, audio, and text)

- Smart routing system that automatically picks the best AI model for whatever you're asking

- Improving their anonymization model so it's small enough to run directly on your phone, meaning sensitive data would never leave your device in the first place, even in anonymized form

But despite these caveats, Freysa's system stands out as a unique method for reconciling the tension between privacy and performance, marking a major step in expanding the potential for private AI.

Given crypto's open-source-forward nature, plus the values of sovereignty this space was built on, it makes sense that the future of private AI would come from our industry. While Freysa’s current model may not be exactly the “Ledger for AI” we envision, it marks a meaningful step in weaving privacy into the technologies we already use every day, which is always cleaner than adding a new one to the mix.

If you want to test this system for yourself, try Enchanted, or, if you're a developer seeking deeper integration, their models on Hugging Face. Publishing those models openly is a decision that will certainly contribute to the growth of private AI overall.